Why This Matters and Where Ezurio Fits

Selecting an edge AI platform is not just about hardware capability; it is about how quickly and confidently teams can move from evaluation to deployment.

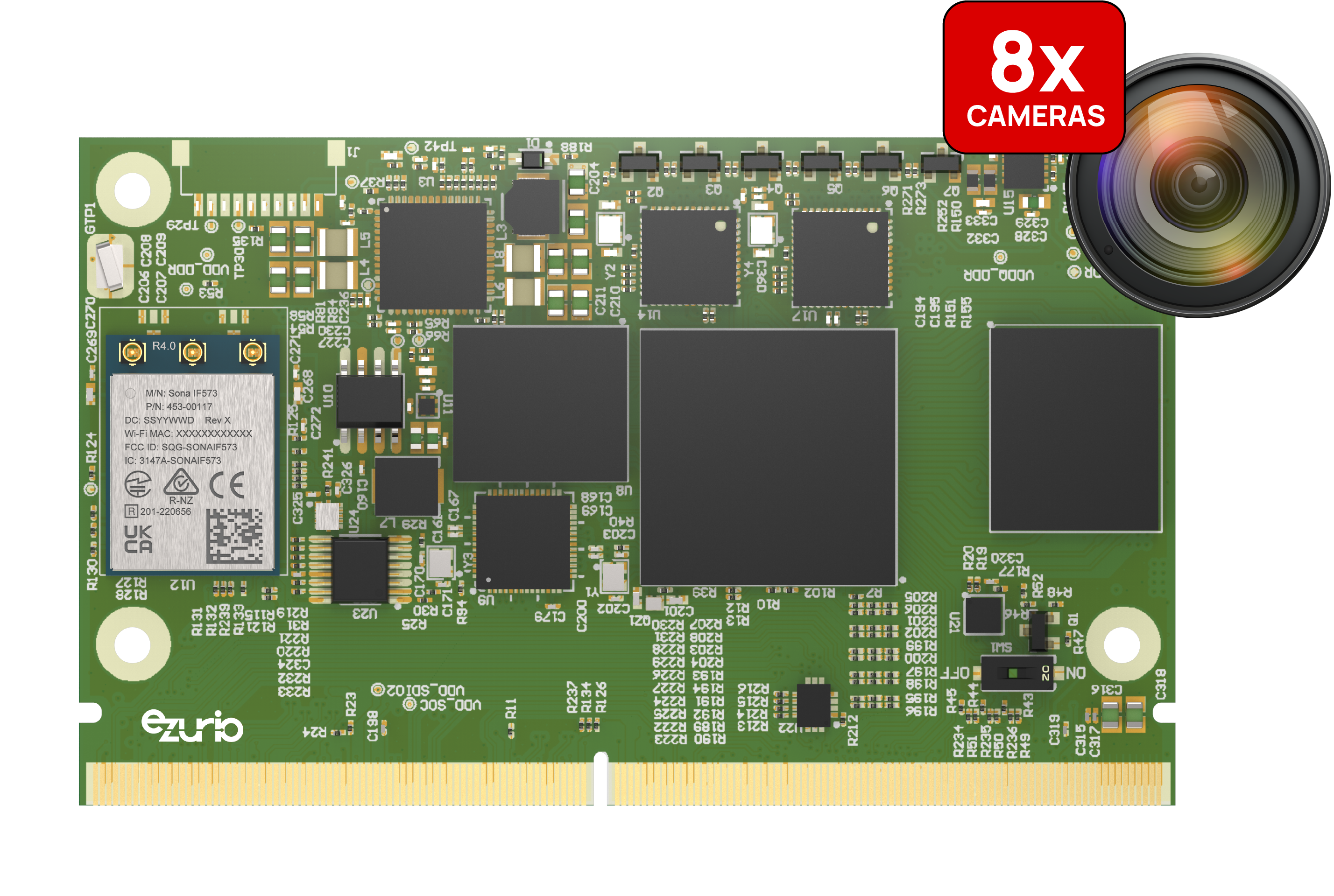

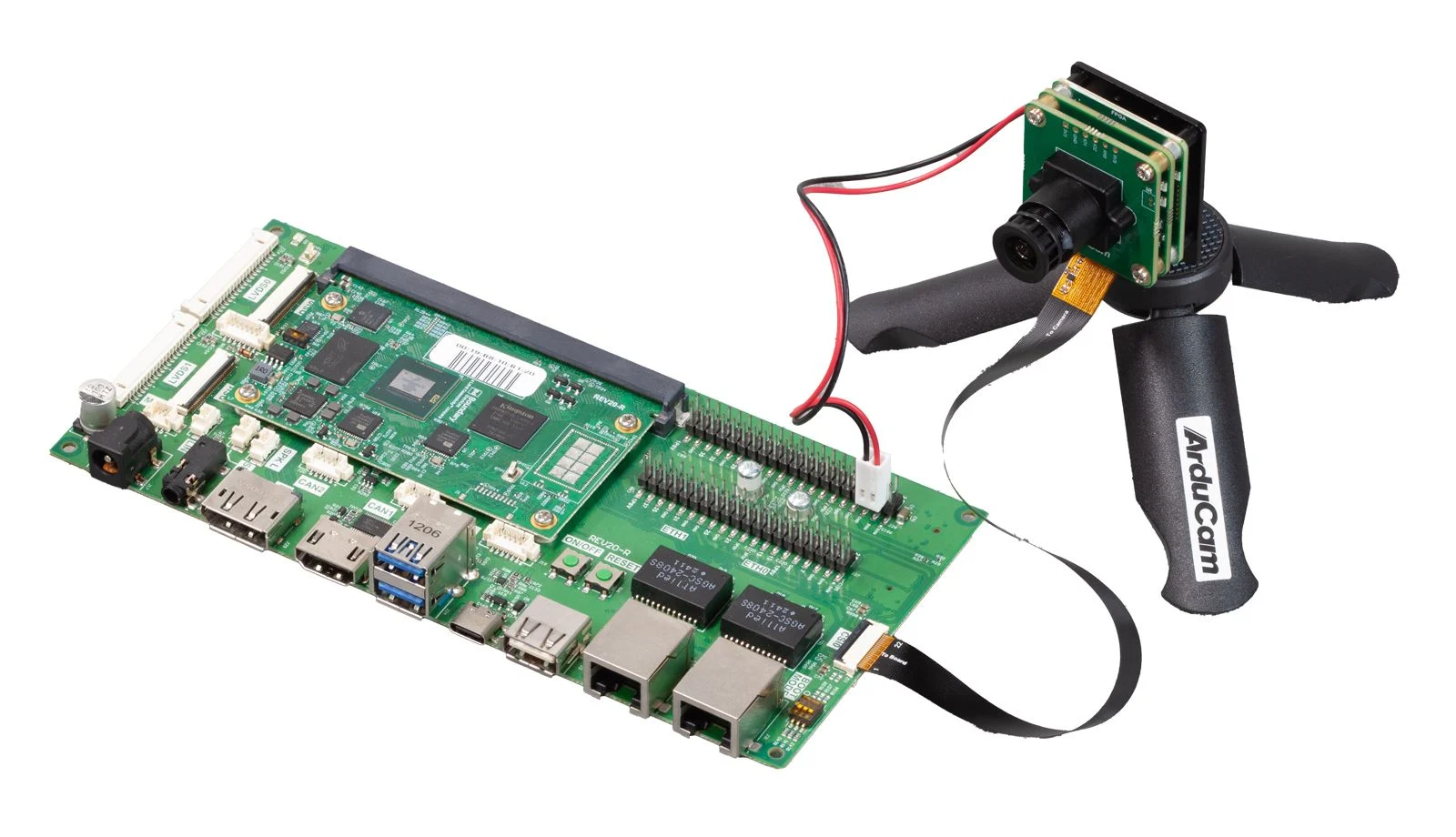

Camera selection and integration are often among the biggest sources of delay in vision projects. Different sensors, drivers, and ISP implementations can force teams to rework software each time requirements change.

To reduce this friction, Ezurio partners with Arducam, a leader in embedded vision solutions. Arducam’s xISP camera modules use a standardized driver and ISP interface, allowing developers to evaluate and swap between cameras without rewriting software. Combined with plug-and-play hardware adapters and BSP-level integration, this approach removes much of the trial and error typically associated with camera selection.

Alongside Ezurio’s Connected SOMs, this system-level integration helps teams prototype faster, scale more easily, and reduce risk as vision requirements evolve.

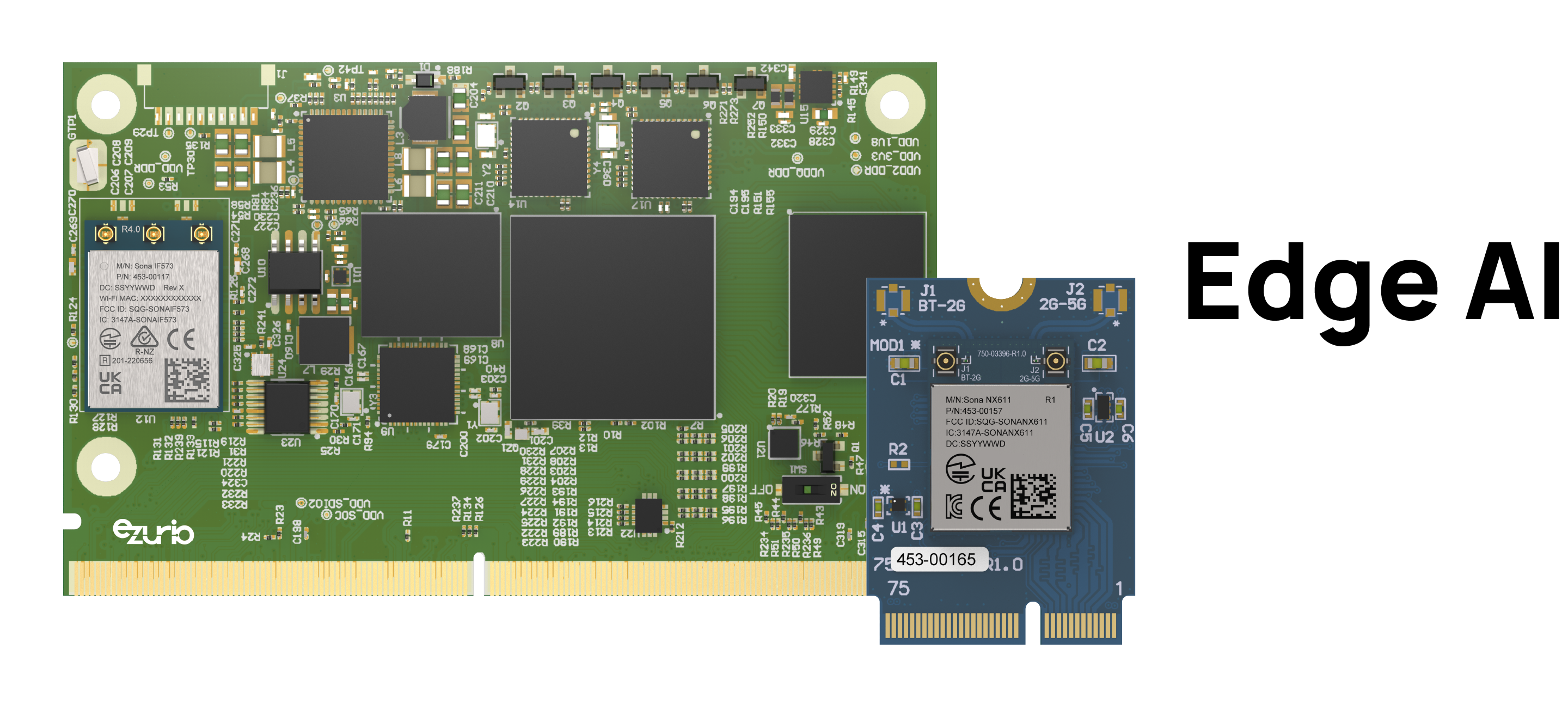

A Connected SOM combines the application processor and Wi-Fi from a single, trusted vendor, eliminating the complexity of sourcing, validating, and supporting connectivity separately. Ezurio lets customers mix and match Wi-Fi 6 and 6E options with supported SOMs, so they can select the right wireless solution for each application with the backing of pre-certified radios, proven integration expertise, and long-term support.