

Published on May 1, 2025

Embedded engineering is entering a new era in 2025. After decades of gradual evolution, the embedded systems field is now experiencing rapid, transformative changes. Devices are getting smarter and more connected, development workflows are becoming more automated and secure, and the tools we use (both hardware and software) are radically modernizing. In short, what used to work for an 8-bit standalone gadget now feels insufficient for an AI-powered edge device with continuous updates.

This article explores five key trends that are driving these changes in embedded engineering today. These aren’t mere buzzwords, but real movements reshaping how embedded engineers design, develop, and deploy systems. From the rise of AI-developed and AI-enhanced systems to the mainstream adoption of RISC-V, from an open-source software takeover to a shift toward memory-safe programming languages, and the integration of DevSecOps with hardware-in-the-loop testing, we’ll dive into each trend in depth. Along the way, we’ll look at technical insights, concrete examples, and the impact on development workflows, tooling, and design decisions. Whether you’re building firmware for microcontrollers or architecting complex IoT devices, these trends are defining the state of the art in 2025.

So, let’s jump in and examine the top trends in embedded engineering that every team should have on their radar this year.

The future of embedded software development is increasingly a collaboration between human engineers and artificial intelligence. In 2025, we are witnessing a surge in AI-driven development tools that can generate, test, and even debug embedded code. Large Language Models (LLMs) and code assistants (like GitHub Copilot and others) are being used to auto-generate firmware routines, suggest fixes, and optimize code. For example, Microsoft’s recent experiments with so-called “AI employees” hint at AI systems taking on technical tasks once reserved for developers.

In practical terms, an embedded developer might use an AI assistant to draft a device driver or to create unit tests, then refine and verify the output. This shifts the developer’s role towards being a system architect and code reviewer, guiding the AI’s work rather than writing every line from scratch. The mundane and repetitive coding tasks (e.g. writing boilerplate for peripheral setup or simple state machines) can be offloaded to AI, freeing engineers to focus on higher-level design and critical thinking. The result is a potential boost in productivity and shorter development cycles. However, this also raises important questions: How do we ensure AI-generated code meets strict quality and safety standards? Teams are establishing new practices for AI-in-the-loop development, such as requiring human review, using AI to generate test cases as well as code, and applying traditional static analysis to AI-written code. In essence, AI is becoming a powerful assistant in the embedded developer’s toolbox, but human oversight and domain expertise remain crucial to guarantee the reliability of the software produced.
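To make the review step concrete, consider the kind of boilerplate an assistant might plausibly draft: a push-button debouncer written as a tiny state machine. The class name `Debouncer` and the three-sample threshold used below are illustrative assumptions. What matters is what a human reviewer should insist on before accepting such code: tests that pin down the edge cases, such as a glitch shorter than the threshold being ignored and a release needing the same confirmation as a press.

```cpp
#include <cassert>
#include <cstdint>

// Debounces a raw digital input sampled at a fixed rate (e.g. from a periodic timer tick).
// The reported state only changes after the raw input has disagreed with it for
// `threshold` consecutive samples, which filters out short contact bounce.
class Debouncer {
public:
    explicit Debouncer(std::uint8_t threshold) : threshold_(threshold) {
        assert(threshold_ > 0);
    }

    // Feeds one raw sample and returns the debounced state after this sample.
    bool update(bool raw) {
        if (raw == stable_) {
            count_ = 0;          // input agrees with the reported state: reset the counter
        } else if (++count_ >= threshold_) {
            stable_ = raw;       // disagreement persisted long enough: accept the change
            count_ = 0;
        }
        return stable_;
    }

    bool state() const { return stable_; }

private:
    std::uint8_t threshold_;
    std::uint8_t count_ = 0;
    bool stable_ = false;        // assume "released" at power-up
};
```

Tests like the ones a reviewer would ask for (a two-sample glitch is rejected, a sustained press and release are each accepted on the third sample) are exactly what an assistant can also be asked to generate, and then checked by a human.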

At the same time, AI isn’t just helping build embedded systems – it’s increasingly integrated into the systems themselves. The year 2025 is seeing explosive growth in edge AI, where intelligence is embedded directly into devices rather than relying on constant cloud connectivity. Devices ranging from tiny microcontrollers to larger embedded processors now run machine learning models locally. The motivation is clear: performing AI computations on-device can dramatically improve latency (real-time response without a round trip to the cloud), enhance privacy (sensitive data like audio or images need not leave the device), and even save bandwidth and energy by reducing communications.

We see edge AI deployed in a wide range of applications – from autonomous vehicles making split-second decisions, to smart home appliances with voice and vision recognition, to industrial sensors doing predictive maintenance on the factory floor. For example, predictive maintenance nodes can run a small neural network that learns the vibration signature of a motor and alerts when an anomaly suggests a failing part, all on the device itself without requiring an internet connection.
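The example above uses a small neural network. As a deliberately simpler stand-in (a statistical baseline, not a neural network), the sketch below shows the same "learn normal on the device, then flag deviations" idea. It learns the mean and spread of a per-window vibration feature, such as RMS acceleration, using Welford's online algorithm, then flags windows whose deviation exceeds a z-score threshold. The class name, the warm-up length, and the threshold are hypothetical choices.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <cstdint>

// Learns the "normal" distribution of one per-window vibration feature with
// Welford's online algorithm (O(1) memory), then flags windows that deviate
// from the learned mean by more than `z_threshold` standard deviations.
class VibrationAnomalyDetector {
public:
    VibrationAnomalyDetector(std::uint32_t warmup_windows, double z_threshold)
        : warmup_(warmup_windows), z_threshold_(z_threshold) {}

    // Returns true if `feature` looks anomalous. Windows seen during warm-up
    // are always treated as normal and used for learning.
    bool observe(double feature) {
        if (n_ >= warmup_ && n_ >= 2) {
            const double stddev = std::sqrt(m2_ / static_cast<double>(n_ - 1));
            if (std::fabs(feature - mean_) > z_threshold_ * stddev) {
                return true;  // anomalous windows are not folded into the baseline
            }
        }
        ++n_;
        const double delta = feature - mean_;
        mean_ += delta / static_cast<double>(n_);
        m2_ += delta * (feature - mean_);  // running sum of squared deviations
        return false;
    }

private:
    std::uint32_t warmup_;
    double z_threshold_;
    std::uint32_t n_ = 0;
    double mean_ = 0.0;
    double m2_ = 0.0;
};

// Root-mean-square of one window of accelerometer samples (a simple vibration feature).
inline double rms(const float* samples, std::size_t count) {
    if (count == 0) return 0.0;
    double sum_sq = 0.0;
    for (std::size_t i = 0; i < count; ++i) {
        sum_sq += static_cast<double>(samples[i]) * samples[i];
    }
    return std::sqrt(sum_sq / static_cast<double>(count));
}
```

Because the detector keeps only a count, a mean, and a running sum of squares, its memory use does not grow with runtime. On an MCU with only a single-precision FPU, `float` would be the more natural type, and a production design would likely use richer features than a single RMS value.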

New software frameworks and tools are making these use cases more accessible. Frameworks such as TensorFlow Lite for Microcontrollers and other TinyML tools (e.g., Edge Impulse) let developers take models trained offline, on a workstation or in the cloud, and deploy them on microcontrollers with limited memory and CPU. These tools often provide optimized kernels for common ML operations so that even a Cortex-M or RISC-V microcontroller can perform tasks like keyword spotting (simple voice recognition) or anomaly detection. For instance, running a fall-detection algorithm on a wearable device’s accelerometer data is now feasible in real time. On the hardware side, we’re also seeing microcontrollers and SoCs designed with AI acceleration in mind – including dedicated neural processing units (NPUs) or GPU cores optimized for inference.
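Fall detection is often prototyped with a simple threshold heuristic before, or alongside, an ML model. The sketch below is that heuristic, not a TinyML model. It looks for a free-fall phase, where the acceleration magnitude drops well below 1 g, followed within a short window by an impact spike. The thresholds and window length passed in are illustrative assumptions, not validated values.

```cpp
#include <cassert>
#include <cmath>
#include <cstdint>

struct Accel { float x, y, z; };  // one accelerometer sample, in units of g

// Two-phase heuristic: a free-fall phase (|a| well below 1 g) followed, within
// `window_samples` samples, by an impact spike (|a| well above 1 g).
class FallDetector {
public:
    FallDetector(float freefall_g, float impact_g, std::uint16_t window_samples)
        : freefall_g_(freefall_g), impact_g_(impact_g), window_(window_samples) {}

    // Feeds one sample; returns true when a free-fall-then-impact pattern completes.
    bool update(const Accel& a) {
        const float mag = std::sqrt(a.x * a.x + a.y * a.y + a.z * a.z);
        if (mag < freefall_g_) {
            remaining_ = window_;     // (re)arm: free fall observed
            return false;
        }
        if (remaining_ > 0) {
            --remaining_;
            if (mag > impact_g_) {
                remaining_ = 0;       // disarm after reporting one fall
                return true;
            }
        }
        return false;
    }

private:
    float freefall_g_;
    float impact_g_;
    std::uint16_t window_;
    std::uint16_t remaining_ = 0;     // samples left in which an impact still counts
};
```

A spike without a preceding free-fall phase (a bump or a tap) is ignored, which is the main reason this two-phase structure is used.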

Designing an embedded product may involve collecting training data and working with data scientists to build a model, then quantizing and deploying that model to run under tight resource constraints. It’s a different workflow than classic embedded coding, but one that is increasingly common. AI-enhanced systems also force design decisions about how much processing to do on the device versus in the cloud, how to update AI models in the field (a new kind of “firmware update”), and how to handle verification and validation for ML-driven features. The bottom line is that AI is both a development aid and a runtime feature of modern embedded systems. Engineers are leveraging AI to build code faster and to build smarter devices that can learn and make decisions on their own. This trend is truly forward-looking – as AI models become more efficient and hardware more capable, we can expect even tiny IoT devices to include some form of “machine intelligence,” and developers will increasingly operate in a co-pilot mode with AI assisting in the creation of software.
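Quantization is usually the step that makes a model fit. Below is a minimal sketch of affine int8 quantization, in the form TensorFlow Lite's quantization spec uses for activations: real_value = scale * (q - zero_point). Choosing the parameters from a simple min/max range is a simplifying assumption. Real converters calibrate ranges on representative data.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstdint>

// Affine (asymmetric) int8 quantization: real_value ~= scale * (q - zero_point).
struct QuantParams {
    float scale;
    std::int32_t zero_point;
};

// Maps [min_val, max_val], widened to include 0.0, onto the int8 range [-128, 127].
inline QuantParams choose_params(float min_val, float max_val) {
    min_val = std::min(min_val, 0.0f);
    max_val = std::max(max_val, 0.0f);
    const float scale = (max_val - min_val) / 255.0f;
    if (scale == 0.0f) return {1.0f, 0};  // all-zero tensor: any scale works
    // Choose zero_point so that min_val maps to -128 (and therefore max_val to 127).
    const auto zp = static_cast<std::int32_t>(std::lround(-128.0f - min_val / scale));
    return {scale, std::clamp<std::int32_t>(zp, -128, 127)};
}

// Rounds to the nearest step and saturates values outside the representable range.
inline std::int8_t quantize(float x, const QuantParams& p) {
    const long q = std::lround(x / p.scale) + p.zero_point;
    return static_cast<std::int8_t>(std::clamp<long>(q, -128, 127));
}

inline float dequantize(std::int8_t q, const QuantParams& p) {
    return p.scale * static_cast<float>(q - p.zero_point);
}
```

The range is widened to include zero so that 0.0 is exactly representable. This matters because zero padding and ReLU outputs produce many exact zeros. Within the calibrated range, the round-trip error stays within one quantization step.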

For years, the processor world was dominated by a few instruction set architectures (ISAs) like ARM and x86. RISC-V, the open-standard ISA that originated at UC Berkeley in 2010, was initially an academic curiosity and a tool for experimentation. But by 2025, RISC-V has exploded onto the mainstream embedded scene, evolving from an innovative idea to a widely adopted foundation for new chips.

RISC-V’s key selling point is that it is an open standard – developers and companies can use and extend it freely, without paying royalties. This has unlocked a wave of innovation and industry support that is now bearing fruit in real products. In fact, RISC-V processor shipments have already reached staggering numbers: over 10 billion RISC-V cores had shipped by 2023. These are not just development boards in labs – they are inside everyday devices. Many RISC-V cores today live in microcontrollers, IoT chips, and as auxiliary cores inside larger SoCs, quietly doing their jobs in wearables, SSD controllers, wireless chips, and more.

What’s driving this rapid rise? A big factor is industry backing from major players across the tech spectrum. Companies that traditionally relied on proprietary cores are embracing RISC-V to gain more control and cut costs. NVIDIA, for example, has been integrating RISC-V cores as management controllers in its GPUs for years and projected that it would ship about a billion RISC-V cores in its products by the end of 2024. These RISC-V cores handle tasks like managing the GPU, scheduling work, and offloading certain driver functions.

Other giants are on board as well: at the 2024 RISC-V Summit in California, Google, Samsung, and NVIDIA all shared the stage to discuss their commitments to RISC-V for future AI and embedded chips. When multiple tech titans align behind an architecture, it’s a clear signal that RISC-V is no longer niche – it’s poised to be a cornerstone of computing. In fact, more than 3,000 companies worldwide are actively developing RISC-V based solutions, ranging from startups building custom IoT chips to established semiconductor firms adding RISC-V product lines.

Even national governments are investing in RISC-V; for instance, China has poured significant funding (over a billion dollars) into RISC-V research and ecosystem development as part of an effort to reduce dependence on foreign IP. This global surge means that as an embedded engineer, you’re increasingly likely to encounter RISC-V in the wild – if you aren’t using a RISC-V based microcontroller or CPU already, chances are you will in the coming years.

For developers, RISC-V’s mainstreaming has several implications. On the positive side, it opens up a new world of flexibility. Because RISC-V is extensible, companies can tailor the ISA with custom instructions for specific workloads (for example, adding DSP or cryptography instructions) without breaking compatibility. This can yield more efficient chips for certain tasks, which you as a developer might leverage via specialized libraries.

The open nature also means a more transparent architecture; documentation and community contributions are widely available, often making it easier to understand how the processor works at a low level (no NDAs required to get the ISA manual!). Toolchain support for RISC-V is mature and robust – GCC and LLVM both have excellent RISC-V support, and standard embedded debuggers and IDEs handle RISC-V targets much like they do ARM. Operating systems and RTOSes have also kept pace.

You can run full Linux on 64-bit RISC-V processors today, and for microcontroller-class devices there’s support in FreeRTOS, Zephyr, and many others. For example, Zephyr RTOS, one of the rising open-source OS platforms, has ports for RISC-V that are regularly updated, and even reference hardware (like the SiFive HiFive boards) to get started.

One tangible example of RISC-V’s march to the mainstream is the appearance of RISC-V cores in popular development boards and modules. Arduino, for example, partnered with SiFive on the RISC-V based Arduino Cinque, and other boards are exploring RISC-V based microcontrollers, indicating that even the hobbyist and maker communities will have RISC-V options.

Commercial microcontrollers and SoCs from companies like Microchip, Nordic Semiconductor (e.g., the nRF54 series with RISC-V cores), Andes Technology, and NXP are increasingly offering RISC-V in their lineups alongside ARM cores. All this means engineers have more choice in processor selection than before. It might also mean learning some new tools or nuances – e.g., the RISC-V memory model and interrupt architecture, which differ from ARM’s, or new debug interfaces. Generally, though, RISC-V’s ecosystem has matured to the point that developing for a RISC-V MCU isn’t radically different from any other architecture.
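In practice, most firmware source stays identical across ISAs, and the architecture-specific parts shrink to a few guarded lines. The sketch below relies on the predefined macros GCC and Clang provide (`__riscv` and `__riscv_xlen` for RISC-V, `__arm__` and `__aarch64__` for Arm). The function names are hypothetical, and the `wfi` path is intended only for bare-metal builds.

```cpp
#include <cassert>
#include <string_view>

// Reports which ISA this translation unit was compiled for, using compiler-predefined macros.
constexpr std::string_view target_isa() {
#if defined(__riscv) && __riscv_xlen == 64
    return "RV64";
#elif defined(__riscv)
    return "RV32";
#elif defined(__aarch64__)
    return "AArch64";
#elif defined(__arm__)
    return "Arm (32-bit)";
#else
    return "host/other";
#endif
}

// The one ISA-specific spot in this sketch: sleep until the next interrupt.
// RISC-V and 32-bit Arm cores both provide a WFI instruction. This is meant for
// bare-metal builds; on host builds used for unit tests it compiles to nothing.
inline void idle_until_interrupt() {
#if defined(__riscv) || defined(__arm__)
    __asm__ volatile("wfi");
#endif
}

static_assert(!target_isa().empty(), "every build reports an ISA name");
```

Keeping such blocks small and isolated lets the same application code, and the same host-side tests, serve both Arm and RISC-V parts.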

From a strategic perspective, RISC-V’s rise is influencing design decisions at the project and company level. Some organizations are adopting RISC-V to avoid vendor lock-in and licensing fees associated with proprietary cores. If you’re designing a new product, you might consider a RISC-V based chip for the cost savings or for the long-term flexibility of being able to swap vendors (since multiple companies can make RISC-V chips). The cost reduction can be substantial – in some cases, using RISC-V has been reported to cut chip development costs by up to 50%, because companies can leverage common open IP and community contributions rather than paying for core licenses and building all software from scratch. Moreover, RISC-V fosters a collaborative community: academic researchers contribute new ideas (one statistic noted over 60% of new academic processor research in 2022 involved RISC-V), and those ideas can rapidly make their way into real implementations and open-source tooling. This communal innovation accelerates advancements in areas like security (e.g., new hardware security extensions) and performance (e.g., vector instruction set extensions for AI) that benefit everyone.

In summary, RISC-V in 2025 is no longer an experiment—it’s a reality that’s reshaping embedded hardware. Engineers should stay abreast of this trend by familiarizing themselves with RISC-V development boards and tools, and by keeping an eye on the growing ecosystem of vendors and open-source projects. It’s quite likely that the firmware you write in the near future could be running on a RISC-V core, if it isn’t already. The good news is that embracing RISC-V often means more openness and more choice in our engineering decisions, aligning well with the broader open-source trend discussed next.

In 2025, open-source software isn’t just a fringe option; it dominates the embedded industry across many domains. From operating systems and toolchains to middleware and development tools, open-source projects have become the default starting point for modern embedded development.

Consider this: if you begin a new embedded project today, chances are high you’ll reach for a Linux distribution or a real-time operating system like Zephyr RTOS or FreeRTOS, you’ll use GCC or Clang to compile, and you’ll leverage libraries from platforms like GitHub for functionality ranging from communication protocols (MQTT, Bluetooth stacks, etc.) to sensor drivers. This is a radical shift from years past, when many embedded products ran on vendor-supplied proprietary kernels, used closed-source compilers or IDEs, and often required purchasing expensive SDKs or licensing custom IP. Now, open-source solutions offer unparalleled flexibility and cost advantages, and they come with the collective support of a global community.

One driving factor for open-source dominance is the support from silicon vendors themselves. It has become a win-win scenario for chip manufacturers to embrace open-source ecosystems. By supporting an open-source OS or tool, a chip vendor can provide customers with a ready software stack at a fraction of the cost it would take to develop and maintain an equivalent proprietary stack. They can launch a new microcontroller or SoC and point developers to an existing open-source community for drivers, networking, and OS support, rather than writing everything in-house. This drastically cuts time-to-market for the silicon and makes the platform more attractive to developers (since it likely “just works” with Linux or Zephyr out-of-the-box).

We see this clearly with projects like Zephyr, a small-footprint RTOS hosted by the Linux Foundation that now has support from major players (Intel, NXP, Nordic, STMicroelectronics, and many others are members of the project). Companies are contributing code for their peripherals and radios directly to Zephyr. The result: if you pick a board from one of these vendors, there’s a good chance you can pull the latest Zephyr release and have all basic functionality working immediately, without hunting for mysterious vendor libraries or jumping through licensing hoops. Zephyr’s growth is telling – it’s often touted as a prime example of where embedded software is headed, with an active community and hundreds of supported boards.

The same story plays out with Linux in embedded: decades ago, embedding Linux was an exotic choice, but now with projects like Yocto, Buildroot, and Debian derivatives for ARM, using Linux on an embedded platform (when resources allow) is almost a no-brainer for anything that needs the richness of a full OS. It’s cost-free, well-tested, and packed with features that would be prohibitive for any single company to develop alone.

For developers, the open-source dominance means access to powerful tools and frameworks that level the playing field. A small startup can build an IoT product leveraging the same quality of OS kernel or networking stack that a big tech company would use, because both are drawing from the commons of open source. This allows engineers to focus on the differentiating parts of their product – the application logic, the unique features – rather than reinventing baseline software components. It also means that knowledge and skills are more transferable. If you’ve learned how to write a device driver for Linux or how to configure FreeRTOS, you can apply that knowledge across many projects and hardware types, rather than learning a new proprietary OS for each job. In terms of design decisions, teams are increasingly asking: “Is there an open-source package that does X, and should we contribute to or leverage that instead of building our own?” In most cases, the answer is yes.

Open-source middleware is everywhere. Need a file system for an embedded device? LittleFS and FatFs are widely used open-source options. Need crypto libraries for secure communication? Mbed TLS or OpenSSL (depending on the device’s resources) are there. Even traditionally closed areas like cellular connectivity have open-source options now (the Zephyr project includes drivers for cellular modems, and there are community projects for LoRaWAN, etc.).


Another angle to open-source dominance is how it intersects with the security and quality of embedded software. With more eyes on the code, vulnerabilities can be caught and fixed faster – a crucial advantage as devices become networked and a target for attacks. It’s no coincidence that regulators and industry standards are starting to encourage (or even require) the use of well-maintained software components, which often are open source, as opposed to one-off proprietary code. We’ll talk more about security in the next section, but it’s worth noting that open source and security are allies here: knowing exactly what’s running on your device (and being able to audit it) is a big plus for trustworthiness.

Even in traditionally conservative, safety-critical domains (think automotive, medical, industrial control), where proprietary systems long held sway due to certification needs, we see open source making inroads. For instance, the Zephyr RTOS community has been actively working on achieving safety certifications (IEC 61508 and others) for its kernel, a move that could allow open-source software to be used in regulated safety-critical products with certification evidence. This is a significant development – it means down the line, even your pacemaker or car ABS might run an open-source OS that has been rigorously vetted, rather than an expensive proprietary RTOS.

To sum up, open-source software now forms the backbone of embedded systems development. For embedded engineers, this trend brings many advantages: lower costs, a rich ecosystem of libraries, faster development (since you start from a higher baseline), and community support. It does, however, come with responsibilities. Teams must stay on top of updates (to pull in security fixes), manage licenses properly, and sometimes contribute back to projects they rely on (to fix bugs or add necessary features). In 2025, ignoring open-source options is simply not feasible for most – you’d be reinventing wheels while your competitors speed by in cars built on community-driven code. Embracing this trend means thinking more like an integrator and contributor of open technology, rather than a solitary builder. The collaborative model of software development has firmly taken hold in embedded systems, and it’s unlocking efficiencies that are essential given the increasing complexity of our devices.

For decades, C reigned as the dominant language for embedded systems. Its low-level control, minimal footprint, and widespread availability made it the default. But in 2025, that dominance is facing significant challenges. Developers are increasingly adopting a wider range of modern languages, including Rust (memory-safe by design), modern C++ (with far safer abstractions than its older dialects), and higher-level languages like Python (specifically MicroPython) for certain applications, to address the growing demands for security, maintainability, scalability, and developer velocity in embedded software.

Memory mismanagement has historically been the root cause of many embedded system failures, leading to issues like buffer overflows, null dereferences, use-after-free errors, and race conditions. In traditional C and older C++ (pre-C++11), preventing these vulnerabilities relied entirely on rigorous developer discipline—a considerable risk, especially for connected devices susceptible to remote exploits.

Rust directly confronts this with its innovative ownership model and compile-time checks, which effectively prevent entire categories of memory errors. Concurrently, modern C++ (C++17, C++20, and beyond) introduces safer abstractions such as smart pointers, standard library containers like std::vector, and constexpr evaluations that shift more checks to compile time. These advancements mitigate human error without compromising performance. (Python, being a managed language with garbage collection, inherently avoids these specific manual memory management issues, operating at a higher level of abstraction).
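To make the difference concrete, here is a minimal sketch of a classic overflow site, copying a length-prefixed payload received over a link, written with modern C++ types. The `[length][payload]` frame layout and the 64-byte capacity are hypothetical. The fixed-size `std::array` carries its own size, the untrusted length is checked against both the received frame and the buffer, and `std::optional` makes the failure path explicit.

```cpp
#include <algorithm>
#include <array>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <optional>

struct Packet {
    static constexpr std::size_t kCapacity = 64;
    std::array<std::uint8_t, kCapacity> data{};
    std::size_t length = 0;
};

// Parses a frame laid out as [len][payload...]. The C version of this often reads
// `memcpy(buf, frame + 1, frame[0])`, which overruns `buf` whenever the sender
// claims more bytes than the buffer holds. Here the untrusted length byte is checked
// against both the bytes actually received and the buffer capacity.
inline std::optional<Packet> parse_frame(const std::uint8_t* frame, std::size_t frame_size) {
    if (frame == nullptr || frame_size == 0) return std::nullopt;

    const std::size_t declared = frame[0];
    if (declared > frame_size - 1 || declared > Packet::kCapacity) {
        return std::nullopt;  // truncated or oversized frame: reject instead of overflowing
    }

    Packet pkt;
    std::copy_n(frame + 1, declared, pkt.data.begin());
    pkt.length = declared;
    return pkt;
}
```

The compiler does not force these checks the way Rust's type system would, but the types make the sizes visible and the unsafe `memcpy` pattern unnecessary.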

Rust has rapidly evolved from a niche language to a serious contender in embedded development. Designed for systems programming, it provides direct hardware access without requiring garbage collection, while simultaneously ensuring memory safety at compile time.

By 2025, Rust is already deployed in critical applications.

These are not merely experimental uses; real-world projects are leveraging Rust's compile-time guarantees to catch bugs that could otherwise remain undetected until deployment, with potentially severe consequences.

Rust also offers zero-cost abstractions, providing high-level expressiveness without incurring runtime overhead. Its ecosystem has matured significantly, with embedded-hal defining common hardware abstraction traits that HAL and driver crates implement, and a broad crate ecosystem supporting diverse functionalities from sensor communication to Wi-Fi modules. Developers can write interrupt handlers, manipulate registers, and even incorporate inline assembly when necessary, while benefiting from Rust’s safety guarantees everywhere outside explicitly marked unsafe blocks.

C++ has long been utilized in embedded systems, but historically, developers often restricted themselves to a conservative subset, avoiding features like exceptions, RTTI, and modern syntax. This was partly due to lagging toolchain support and the performance constraints of older hardware.

However, this landscape has changed dramatically. Contemporary microcontrollers are far more powerful, and compilers have advanced rapidly. C++17 and C++20 brought transformative features: a far more capable constexpr (introduced in C++11, it now enables substantial compile-time computation), and, in C++20, concepts (providing refined template constraints), modules (improving encapsulation and build times), and coroutines (facilitating asynchronous programming).
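As an illustration of compile-time computation, the sketch below builds a CRC lookup table with `constexpr` and verifies it with `static_assert`, so a wrong table fails the build rather than a device in the field. It assumes the common CRC-8/SMBUS parameters (polynomial 0x07, initial value 0x00, no reflection, no final XOR), whose standard check value for the ASCII string "123456789" is 0xF4. The function names are illustrative.

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <cstdint>

// Builds the 256-entry lookup table for an MSB-first CRC-8 at compile time.
// In C, such a table is usually pasted in as a generated array or built at boot.
constexpr std::array<std::uint8_t, 256> make_crc8_table(std::uint8_t poly) {
    std::array<std::uint8_t, 256> table{};
    for (std::size_t i = 0; i < table.size(); ++i) {
        auto crc = static_cast<std::uint8_t>(i);
        for (int bit = 0; bit < 8; ++bit) {
            crc = (crc & 0x80u) ? static_cast<std::uint8_t>((crc << 1) ^ poly)
                                : static_cast<std::uint8_t>(crc << 1);
        }
        table[i] = crc;
    }
    return table;
}

// CRC-8/SMBUS: poly 0x07, init 0x00, no reflection, no final XOR.
inline constexpr auto kCrc8Table = make_crc8_table(0x07);

constexpr std::uint8_t crc8(const char* data, std::size_t len) {
    std::uint8_t crc = 0x00;
    for (std::size_t i = 0; i < len; ++i) {
        crc = kCrc8Table[crc ^ static_cast<std::uint8_t>(data[i])];
    }
    return crc;
}

// Evaluated entirely by the compiler.
static_assert(kCrc8Table[1] == 0x07, "table entry for 0x01 must equal the polynomial");
static_assert(crc8("123456789", 9) == 0xF4, "standard CRC-8/SMBUS check value");
```

The table lands in flash as constant data, and the same `crc8` function remains callable at run time on received buffers.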

Modern C++ encourages the development of safer, more expressive code. Instead of managing raw pointers, developers now utilize containers and smart pointers. Complex state machines can be elegantly implemented using class-based patterns or coroutines. These features are not just syntactic improvements; they result in firmware that is easier to extend, debug, and maintain.
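A common way to apply smart pointers in firmware is to wrap a C-style HAL handle so that ownership is part of the type. In the sketch below, the `dma_channel_open`/`dma_channel_close` API is hypothetical and stubbed out so the example is self-contained. Using `std::unique_ptr` with a custom deleter guarantees the channel is closed exactly once, including on early returns, and makes accidental copies a compile error.

```cpp
#include <cassert>
#include <memory>
#include <utility>

// ---- Hypothetical C-style HAL, stubbed so the sketch is self-contained ----
struct dma_channel {
    int id;
    bool in_use;
};

static dma_channel g_channels[2] = {{0, false}, {1, false}};

dma_channel* dma_channel_open() {
    for (auto& ch : g_channels) {
        if (!ch.in_use) {
            ch.in_use = true;
            return &ch;
        }
    }
    return nullptr;  // every channel is busy
}

void dma_channel_close(dma_channel* ch) { ch->in_use = false; }

// ---- Modern C++ wrapper: ownership is expressed in the type ----
struct DmaCloser {
    void operator()(dma_channel* ch) const noexcept { dma_channel_close(ch); }
};

// Move-only handle; the channel is closed when its (single) owner is destroyed.
using DmaChannel = std::unique_ptr<dma_channel, DmaCloser>;

DmaChannel acquire_dma() { return DmaChannel(dma_channel_open()); }
```

Because the deleter is an empty struct, this `std::unique_ptr` is typically the same size as a raw pointer, so the extra safety does not cost RAM.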

Furthermore, popular frameworks like Arduino, Mbed OS, and Zephyr are increasingly embracing modern C++ idioms. In essence, embedded C++ has matured significantly, and developers are realizing the substantial benefits.

Alongside lower-level languages, MicroPython has carved out a significant space, particularly for application-level code, prototyping, and educational purposes. As a lean implementation of Python 3 that includes a subset of the standard library, it brings the language’s renowned ease of use and rapid development capabilities to resource-constrained devices.

While not typically used for hard real-time or lowest-level driver development where Rust or C++ might be preferred, MicroPython excels at handling sensors, networking, user interfaces, and overall application logic. Its interactive REPL (Read-Evaluate-Print Loop) allows for incredibly fast iteration and debugging directly on the hardware.

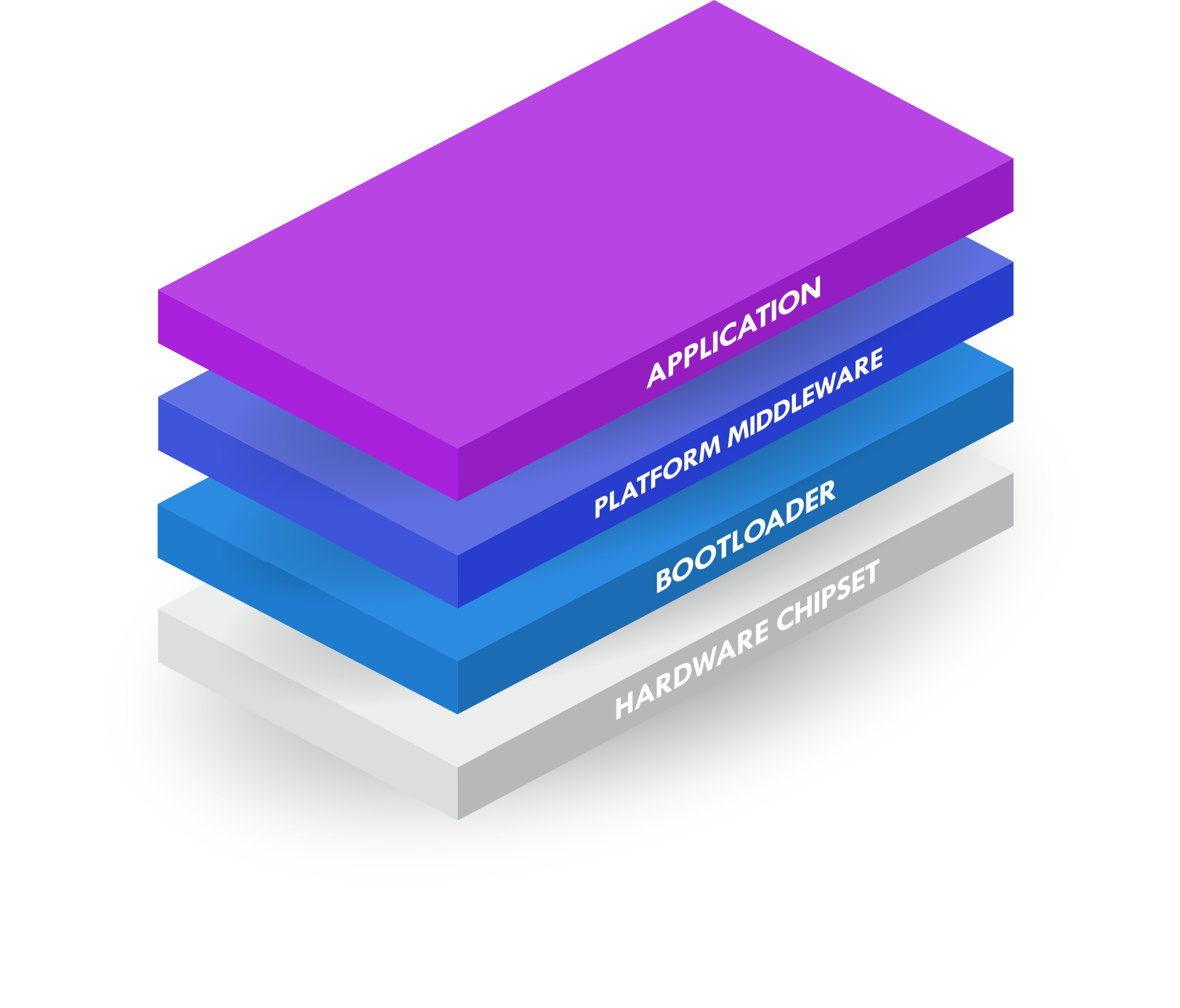

For many projects, a hybrid approach is emerging: using C, C++, or Rust for performance-critical drivers and real-time tasks, and MicroPython for the application layer, leveraging the strengths of each language. This allows developers to build complex functionality quickly while maintaining performance where it matters most. Ezurio's Canvas Software Suite takes this exact approach, building hardware drivers and networking stacks in C while empowering developers to orchestrate application logic with Python.
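In a hybrid design like this, the boundary is usually a small set of functions with C linkage and C-compatible types, which a binding layer (for example a MicroPython native C module or a Cython wrapper) can call. The sketch below shows that shape for a performance-critical routine. The function name `signal_rms_q15` and its error convention are hypothetical; this is not the Canvas Software Suite's actual API.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <cstdint>

// Performance-critical core, written in C++ but exported with C linkage and plain
// C types so that a scripting layer's binding can call it.
// Returns 0 on success and -1 on invalid arguments, leaving *out_rms untouched.
extern "C" int signal_rms_q15(const std::int16_t* samples, std::size_t count, float* out_rms) {
    if (samples == nullptr || out_rms == nullptr || count == 0) {
        return -1;  // C-style error code: no exceptions cross the language boundary
    }
    // 64-bit accumulator: each square is at most 2^30, so overflow would need > 2^33 samples.
    std::int64_t sum_sq = 0;
    for (std::size_t i = 0; i < count; ++i) {
        sum_sq += static_cast<std::int64_t>(samples[i]) * samples[i];
    }
    const double mean_sq = static_cast<double>(sum_sq) / static_cast<double>(count);
    *out_rms = static_cast<float>(std::sqrt(mean_sq) / 32768.0);  // Q15 full scale -> 1.0
    return 0;
}
```

The Python side then deals only in buffers and numbers, while the tight loop runs as compiled code.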

Historically, many embedded developers avoided modern languages out of fear that they’d bloat code or slow execution. While C and assembly remain the most resource-efficient options, in 2025, this concern holds less weight for many projects. Today's cost-effective microcontrollers possess greater RAM and processing speed than many early desktop computers. As one expert succinctly put it, "Silicon is cheap."

Languages like Rust and modern C++ are designed for systems programming and offer performance comparable to C, often allowing for better optimizations due to clearer semantics.

MicroPython, which compiles Python source to bytecode that is then interpreted on the device, naturally has a larger memory footprint and slower execution speed than compiled languages like C, C++, or Rust. However, for tasks that are not performance-critical or when development speed is paramount, its overhead is often acceptable. The increased resources available on modern hardware mean that even higher-level languages can now run effectively on devices that would have been C-only a decade ago. The choice of language increasingly depends on the specific requirements of each component of the system, balancing performance needs with development efficiency and maintainability.

Similarly, the use of Python within an embedded Linux environment faces the same performance challenges; however, tools like Cython can be used to achieve the readability and maintainability of Python with close to the performance of C (as demonstrated by Ezurio’s Summit RCM service).

The transition to modern C++, Rust, or the introduction of Python impacts more than just coding syntax; it requires teams to re-evaluate training methodologies, tooling, and even architectural approaches.

Rust, in particular, presents a steeper learning curve. Developers must grasp core concepts like ownership, borrowing, and lifetimes, which are often new to those with a C background. However, companies are actively investing in internal training programs and utilizing pilot projects to facilitate a safe and effective transition.

Python development in embedded systems leverages familiar tools and workflows, often involving simple editors and the REPL. The ecosystem provides libraries for hardware interaction, similar to Python on the desktop.

Toolchains are also adapting. IDEs and debuggers are expanding their support for new language features and debugging across language boundaries in hybrid projects. Essential tools like GDB, OpenOCD, and static analyzers are being updated to accommodate Rust and advanced C++ constructs, while tools specific to MicroPython aid in deployment and debugging. Build systems are modernizing; Rust benefits from Cargo, which provides integrated dependency management, while many C++ teams are migrating to CMake combined with package managers such as vcpkg or Conan, and Python projects rely on pip-like dependency management for their libraries.

This evolution results in more streamlined workflows and improved software architecture. Rust's enforced ownership rules promote clearer module boundaries. Modern C++ templates and classes enable the creation of generic hardware abstraction layers that are easily reusable across projects, reducing the reliance on macro-heavy C headers and redundant struct definitions. Python's clear syntax and modular nature facilitate building readable and maintainable application logic quickly.
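As a sketch of such a reusable abstraction, the driver below is a class template over any bus type that provides a `write_read` method. The same driver code can therefore run against a real I2C peripheral or against a mock in a host-side CI job, with no virtual-call overhead and no register macros. The sensor (a signed 16-bit reading in register 0x00, in units of 1/128 °C), the bus method signature, and the `MockI2c` type are all hypothetical.

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <optional>

// Driver for a hypothetical I2C temperature sensor. `Bus` must provide:
//   bool write_read(std::uint8_t addr, const std::uint8_t* tx, std::size_t tx_len,
//                   std::uint8_t* rx, std::size_t rx_len);
template <typename Bus>
class TempSensor {
public:
    static constexpr std::uint8_t kTempReg = 0x00;

    TempSensor(Bus& bus, std::uint8_t addr) : bus_(bus), addr_(addr) {}

    // Reads the big-endian raw value and converts it to degrees Celsius.
    std::optional<float> read_celsius() {
        const std::uint8_t reg = kTempReg;
        std::array<std::uint8_t, 2> rx{};
        if (!bus_.write_read(addr_, &reg, 1, rx.data(), rx.size())) {
            return std::nullopt;  // bus error (NACK, timeout, ...)
        }
        const auto raw = static_cast<std::int16_t>((rx[0] << 8) | rx[1]);
        return static_cast<float>(raw) / 128.0f;
    }

private:
    Bus& bus_;
    std::uint8_t addr_;
};

// Host-side stand-in for the I2C peripheral, used in unit tests.
struct MockI2c {
    std::uint8_t expected_addr = 0;
    std::array<std::uint8_t, 2> reply{};
    bool fail = false;

    bool write_read(std::uint8_t addr, const std::uint8_t* tx, std::size_t tx_len,
                    std::uint8_t* rx, std::size_t rx_len) {
        if (fail || addr != expected_addr || tx_len != 1 || tx[0] != 0x00 || rx_len != 2) {
            return false;
        }
        rx[0] = reply[0];
        rx[1] = reply[1];
        return true;
    }
};
```

On target, the template would be instantiated with a thin wrapper around the vendor's I2C driver instead of `MockI2c`. The driver logic itself is tested once, on the host.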

Traditionally, developing embedded software was a slow, manual process: code is written, built perhaps on a developer’s machine, then manually flashed to a device on someone’s desk for testing, and releases might be packaged for manufacturing once in a blue moon. This is changing fast. Today, many teams treat firmware just like any other software project in terms of automation. They use CI servers (Jenkins, GitLab CI, etc.) to automatically build firmware on each code commit, run a suite of tests, and even package or deploy firmware updates continuously. This is a big cultural shift – it requires embedding testing hooks into your code, writing automated test cases for embedded logic, and sometimes writing PC simulators or emulators for parts of the system. The payoff is that bugs are caught early (right when a developer introduces them) and teams can iterate features with confidence that they haven’t broken existing functionality. In 2025, we see an even stronger push for such practices, with emphasis on observability and monitoring once devices are deployed.

One unique challenge in embedded, as opposed to pure software, is that testing can’t happen independently of the hardware. You can unit test logic on your PC, but you can’t be sure the timing or hardware interactions are right until you run the code on the target device. That’s where hardware-in-the-loop (HIL) testing comes in – and now it’s being automated. Imagine a lab setup with dozens of boards connected to a CI server. Whenever code is committed, the server builds the firmware, flashes it onto a test board, and runs a battery of tests by actually interacting with the hardware (pressing buttons, reading sensor values, etc.).

This is no longer imagination – it’s reality in many companies’ pipelines in 2025. Ezurio has implemented a CI system called TRaaS, in which firmware builds and unit tests run automatically with GitHub Actions. Test racks containing device-specific test stations, each built around a Raspberry Pi running Robot Framework to flash firmware images onto the hardware, then run the unit’s tests to verify the code’s behavior on real hardware. This entire sequence is triggered automatically on each GitHub pull request, catching issues immediately if, say, the timing of a signal is off or a peripheral isn’t initialized correctly. In larger-scale setups, companies like Microchip have built farms of test boards: as one commenter noted, Microchip maintained a lab with essentially every model of its microcontrollers plugged into a matrix of programmers and automated test scripts, so that any change to its toolchain or libraries would be validated on actual chips.
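The pass/fail logic on such a test station is often simple. The sketch below checks a captured periodic signal against an expected period and tolerance; the captured data could be, for example, rising-edge timestamps from a logic analyzer or a capture input on the station. This is the kind of check that catches a signal whose timing is off. Ezurio's stations use Robot Framework, so this C++ function, its name, and the microsecond timestamp format are hypothetical illustrations of the check itself, not of TRaaS.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Outcome of checking the edge-to-edge periods of a captured signal.
struct TimingReport {
    bool pass;
    std::size_t first_bad_edge;   // index of the first out-of-tolerance edge (valid if !pass)
    std::int64_t worst_error_us;  // largest |measured - expected| period error seen
};

// Checks every edge-to-edge period against `expected_us` +/- `tolerance_us`.
// Fewer than two edges means the signal never toggled, which also fails.
inline TimingReport check_period(const std::vector<std::int64_t>& edges_us,
                                 std::int64_t expected_us, std::int64_t tolerance_us) {
    TimingReport r{edges_us.size() >= 2, 0, 0};
    bool found_bad = false;
    for (std::size_t i = 1; i < edges_us.size(); ++i) {
        const std::int64_t period = edges_us[i] - edges_us[i - 1];
        const std::int64_t err = period > expected_us ? period - expected_us
                                                      : expected_us - period;
        if (err > r.worst_error_us) r.worst_error_us = err;
        if (err > tolerance_us && !found_bad) {
            found_bad = true;
            r.pass = false;
            r.first_bad_edge = i;
        }
    }
    return r;
}
```

Reporting the worst error as well as the verdict helps distinguish a marginal board from a real regression when a CI run fails.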

The availability of affordable platforms (Raspberry Pi as a CI controller, Arduinos or custom circuits as test harnesses) makes HIL CI feasible without huge investment. The benefit is enormous: you catch hardware-related bugs that pure simulation or unit tests would miss. For instance, you might discover a race condition where an interrupt arrives earlier than expected, or an analog sensor reading that your code wasn’t handling correctly – issues that manifest only on the real device. By incorporating HIL in CI, engineers can test complex interactions continuously, not just at the end of a project.

This moves integration testing much earlier in the workflow, a practice known as “shift left” (bringing an activity forward in time or workflow), and it reduces late-stage surprises. The “shift left” concept now applies to security as well; GitHub emphasizes it heavily in its security offerings, moving security evaluation to the time of code commit rather than further downstream.

This comprehensive integration of development, security, and operations in embedded is a game-changer. It transforms embedded engineering from a slower, riskier endeavor into a more predictable and agile process. By 2025, any organization that wants to deliver reliable, secure embedded products at speed needs to adopt these DevSecOps and HIL testing practices. It might require up-front investment in setting up infrastructure and developing tests, but the cost is repaid many times over by preventing costly bugs, breaches, or recalls down the line. Indeed, industry reports emphasize that if you don’t automate and secure your embedded development pipeline, you’ll be left behind. The tools and techniques have matured to make this feasible, and the competitive landscape (and regulatory environment) demands it.

Embedded engineering in 2025 is markedly different from a decade ago. The five trends we’ve explored (AI’s infusion into development and devices, the rise of RISC-V, open-source software’s dominance, the move to memory-safe languages, and the adoption of DevSecOps with HIL testing) are collectively raising the embedded world to new levels of capability and quality. Crucially, these trends do not exist in isolation; they reinforce one another. For instance, the complexity of AI and connected features would be unmanageable without modern languages and DevOps practices. The flexibility of RISC-V and the innovation it enables are amplified by the open-source ecosystems and community contributions surrounding it. The push for security in DevSecOps is aided by Rust’s safety guarantees and by transparent open-source code that many eyes can scrutinize. In essence, we are entering an era where embedded systems are as advanced and dynamic as any software-centric field, shedding the old image of slow progress and inflexible designs.

Looking forward, these trends set the stage for even more exciting developments. Today’s edge AI could evolve into on-device neural networks that self-improve. RISC-V’s openness might catalyze a wave of custom silicon for specific applications (from neural processors to ultra-low-power sensors) that plugs seamlessly into open software stacks. The convergence of open source and safety-critical development might finally crack the problem of certifying open software for the highest levels of assurance. We might see a day when writing embedded software in unsafe C is as rare as writing desktop applications in assembly: not gone, but largely superseded by better tools. And with DevSecOps, firmware updates may become as uneventful and routine as app updates on your phone, leading to embedded devices that get better and more secure with age rather than remaining static boxes with frozen firmware.

In conclusion, 2025 finds embedded engineering at a thrilling inflection point. The community is taking the best of software innovation and applying it to embedded development. As an engineer, it’s a time to be grounded in fundamentals yet open to new approaches, to be thoughtful about design but bold in adopting forward-looking technology. The trends highlighted here are not just buzz; they are practical, here-and-now shifts changing how we work and what we can create. By understanding and embracing them, we are better equipped to build the next generation of embedded systems: ones that are smarter, safer, more reliable, and delivered faster than ever before. In the rapidly evolving landscape of technology, embedded systems are no longer playing catch-up; they’re helping lead the charge. Here’s to building the future, one embedded device at a time, with the trends of 2025 lighting the way.
